VMware Cloud Foundation 4.4.0.0 went GA very recently, on 10 February 2022. Looking at the release notes, there are a few interesting new features and capabilities, and I’m only going to quote the ones I consider the most interesting.

- From VCF 4.4, the vRealize Suite products have been decoupled from the VCF BOM. This effectively means it’s now possible to upgrade components such as vRLI, vROps or vRA directly from vRealize Suite Lifecycle Manager (vRSLCM), which on VCF 4.4 is at version 8.6.2. See the VCF 4.4 BOM.

- LCM pre-checks now also perform file system capacity and password checks

- Log4j fixes and another Apache HTTP Server fix (CVE-2021-40438)

- User Activity Logging: New activity logs capture all the VMware Cloud Foundation API invocation calls, along with user context. The new logs will also capture user logins and logouts to the SDDC Manager UI

- Enhancements to reduce the SDDC Manager CPU and memory usage
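The file system capacity pre-check can also be approximated by hand before scheduling a bundle. This is a minimal sketch, not how VCF implements its check: it simply flags any given path whose `df -P` usage is above a threshold. The `check_fs_usage` name, the 80% threshold and the example paths are my own assumptions, not VCF values.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of VCF): warn when a file system is fuller than a threshold.
# Usage: check_fs_usage <threshold-percent> <path> [<path> ...]
# Returns 0 if every path is at or below the threshold, 1 otherwise.
check_fs_usage() {
  local threshold="$1"
  shift
  local rc=0 path pct
  for path in "$@"; do
    # df -P prints one POSIX-format line per file system; column 5 is "Capacity" (e.g. "42%").
    pct=$(df -P "$path" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
    if [ -z "$pct" ]; then
      printf 'ERROR could not read usage for %s\n' "$path" >&2
      rc=1
    elif [ "$pct" -gt "$threshold" ]; then
      printf 'WARN %s is %s%% full (threshold %s%%)\n' "$path" "$pct" "$threshold"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `check_fs_usage 80 / /var/log` prints one WARN line per path above 80% and returns 1 if anything was flagged.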

Pre-checks

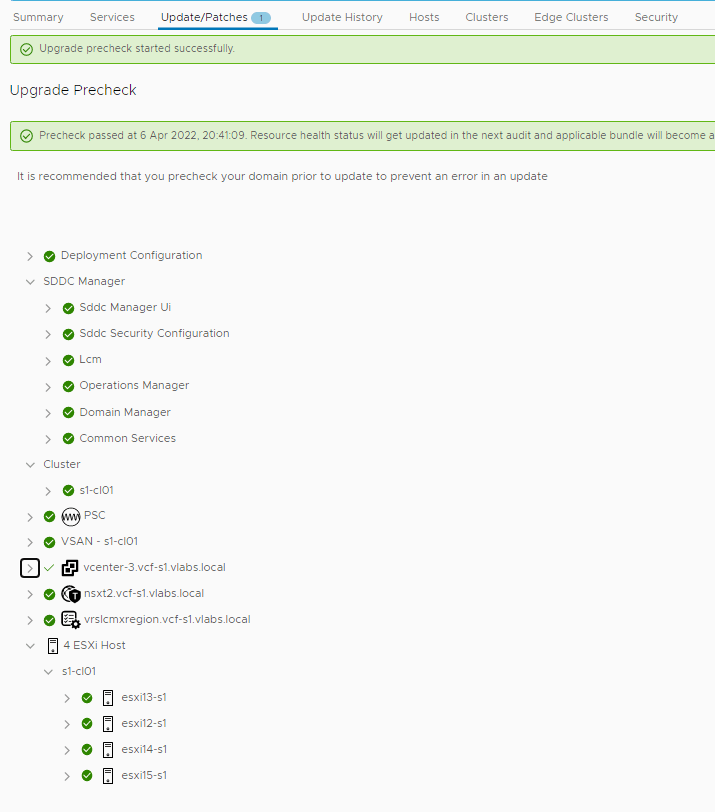

One of the new features of 4.3 is that once you have downloaded multiple bundles (in my case 4.4.0.0 and 4.3.1.1), running the pre-checks asks you which version you want to pre-check against. Here you can see I’m selecting 4.4.0.0 as the skip-level target version.

Pre-checks are looking good

As you can see from the following screenshot, my setup is currently at 4.3.0.0

Let’s schedule the bundle and roll

SDDC Manager Bundle

One strange behaviour I noticed immediately was that the Management Workload Domain was displayed as being at 4.3.0, when in fact SDDC Manager was running 4.3.1.1-19235535.

SDDC Manager Configuration Drift Bundle

Before I could apply the 4.4.0.0 SDDC Configuration Drift bundle, I hit the following problem.

Basically, SDDC Manager was checking that the certificate of my Microsoft Certificate Authority server had a matching SAN field, which it didn’t. SDDC Manager was in fact pointing to the CA via its FQDN; however, the certificate itself had been issued without the SAN attribute (required for the FQDN).
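If you want to confirm whether the CA’s web server certificate carries the FQDN in its SAN field before re-running the pre-checks, OpenSSL can print that extension. This is a minimal sketch assuming OpenSSL 1.1.1 or later (needed for `x509 -ext`) and a certificate saved locally in PEM format; `cert_has_san` is a hypothetical helper name and `ca.lab.local` a placeholder FQDN, neither comes from SDDC Manager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if <pem-file> lists DNS:<fqdn> in its
# Subject Alternative Name extension (case-insensitive match).
# Requires OpenSSL 1.1.1+ for "openssl x509 -ext".
cert_has_san() {
  local pem="$1" fqdn="$2"
  # -ext prints e.g. "X509v3 Subject Alternative Name:" followed by "DNS:a, DNS:b";
  # split the entries onto separate lines, trim leading spaces, then look for an exact match.
  openssl x509 -in "$pem" -noout -ext subjectAltName 2>/dev/null \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//' \
    | grep -qixF "DNS:${fqdn}"
}
```

To grab the certificate the CA actually serves, something like `openssl s_client -connect ca.lab.local:443 -servername ca.lab.local </dev/null | openssl x509 > ca.pem` works; `cert_has_san ca.pem ca.lab.local` then returns non-zero if the SAN entry is missing.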

First of all, I had to amend the CA Server URL from SDDC Manager > Security > Certificate Management and add a DNS alias record on the DNS server.

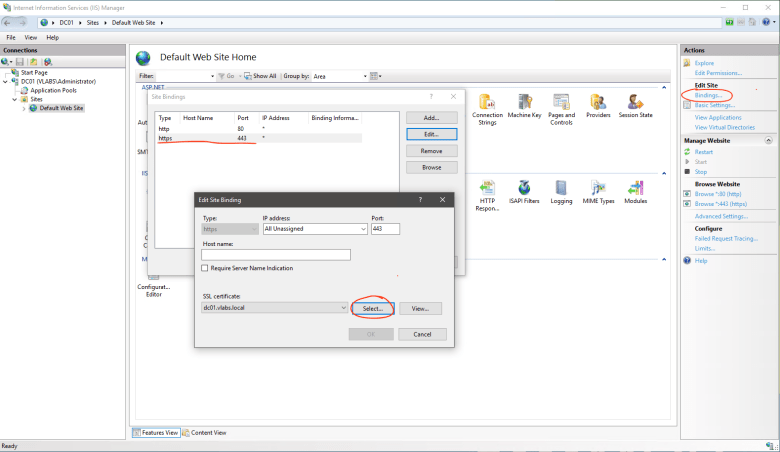

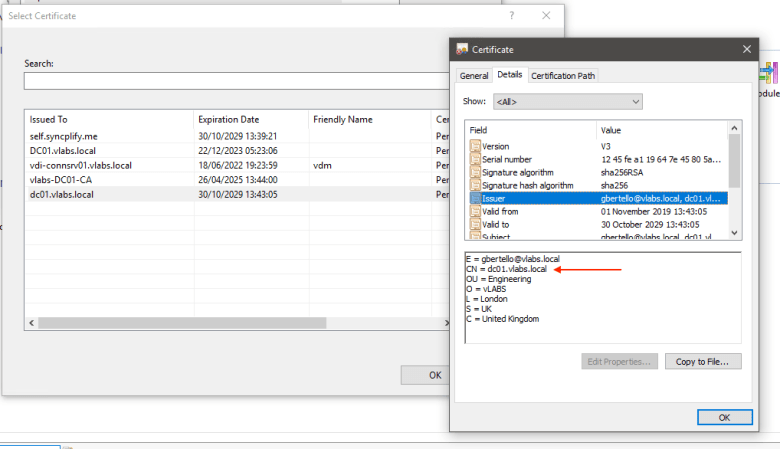

After that, I had to change the certificate of the IIS website itself so that the CN on the certificate matched the FQDN (see red arrow).
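To double-check the CN after re-issuing the IIS certificate, OpenSSL can print the certificate subject. This is a minimal sketch with hypothetical helper names (`cert_cn`, `cert_cn_matches`); it assumes the certificate is saved locally in PEM format and that the subject contains a single CN.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Common Name (CN) from a PEM certificate's subject.
cert_cn() {
  # With -nameopt RFC2253 the subject looks like "subject=CN=ca.lab.local,O=Lab";
  # keep only the CN value (everything after "CN=" up to the next comma).
  openssl x509 -in "$1" -noout -subject -nameopt RFC2253 \
    | sed -n 's/^subject=.*CN=\([^,]*\).*$/\1/p'
}

# Hypothetical helper: succeed only if the certificate's CN equals <fqdn>, ignoring case.
cert_cn_matches() {
  local cn want
  cn=$(cert_cn "$1" | tr '[:upper:]' '[:lower:]')
  want=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  [ -n "$cn" ] && [ "$cn" = "$want" ]
}
```

For example, `cert_cn_matches ca.pem ca.lab.local`, where `ca.pem` and `ca.lab.local` are placeholders for the saved IIS certificate and the CA FQDN.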

I also had to decommission the unmanaged hosts because I kept receiving the following SSL certificate alert, for which I couldn’t easily find a fix. As they were unmanaged, it was relatively painless to remove them from SDDC Manager.

The backup user password on SDDC Manager had also expired, so I had to change it using passwd and then remediate it from the SDDC Manager GUI as follows.
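To spot an expired local password before the pre-checks do, the expiry date can be read from `chage -l`. This is a minimal sketch assuming the SDDC Manager account is literally called `backup`, that `chage` prints English field names (hence `LC_ALL=C`), and that GNU `date -d` is available; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `chage -l` output on stdin and print the "Password expires" value.
password_expiry_from_chage() {
  # Lines look like "Password expires<TABs>: Mar 01, 2022"; split on ": " and keep the value.
  awk -F': ' '/^Password expires/ { print $2 }'
}

# Hypothetical helper: succeed if <expiry> (as printed by chage) is at or before
# <now-epoch> (defaults to the current time). "never" counts as not expired.
# Requires GNU date for "date -d".
password_is_expired() {
  local expiry="$1" now="${2:-$(date +%s)}" exp_epoch
  [ "$expiry" = "never" ] && return 1
  exp_epoch=$(date -d "$expiry" +%s) || return 2
  [ "$exp_epoch" -le "$now" ]
}
```

As root on SDDC Manager, `expiry=$(LC_ALL=C chage -l backup | password_expiry_from_chage)` followed by `password_is_expired "$expiry" && echo "backup password expired"` tells you whether it’s time for `passwd backup`.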

And finally, all pre-checks cleared.

Lastly, I had this annoying vCenter Server Inventory Status RED alert for the vAPI Endpoint.

Rebooting did not fix this problem either. I could not figure out why I was getting this error, but in the end it ignored the problem and said it was OK to continue.

The bundle eventually failed. Checking the SDDC Manager LCM bundle logs folder at /var/log/vmware/vcf/lcm/thirdparty/upgrades/f513d76a-ed12-465e-9a42-b17ba3fec164/sddcmanager-migration-app/logs, I found a complaint that NSX-T couldn’t find one Edge Transport Node. That’s when I remembered I had moved two edge transport nodes (originally deployed during bring-up) into a new edge cluster, because I had added four extra edges to the original cluster. Once I moved them back, bingo.
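Rather than browsing each upgrade folder by hand, a quick search across all of them can surface the failing step. This is a minimal sketch: `find_upgrade_errors` is a hypothetical name, the default path is the one from my SDDC Manager with the upgrade UUID replaced by a wildcard, and matching on "error" or "exception" is my guess at a useful filter, not a documented log format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print file:line:text for every line containing "error" or
# "exception" (case-insensitive) in the sddcmanager-migration-app logs of any upgrade.
find_upgrade_errors() {
  local base="${1:-/var/log/vmware/vcf/lcm/thirdparty/upgrades}"
  # Each upgrade lives in its own UUID-named folder, so match the logs folder by pattern.
  find "$base" -type f -path '*/sddcmanager-migration-app/logs/*' \
    -exec grep -HniE 'error|exception' {} +
}
```

Running `find_upgrade_errors` on SDDC Manager and piping the result through `less` or a further `grep` narrows things down quickly.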

4.4.0.0 Configuration Drift #2 Bundle [UPDATE May 2022]

Since the release of VCF 4.4.0.0, an issue has surfaced: the VMware Update Manager database can become inconsistent after VCF deletes the ESXi patch (ISO) and the baseline used to perform the ESXi update. For this reason, a second 4.4.0.0 configuration drift bundle was released. See https://kb.vmware.com/s/article/87826

NSX-T 3.1.3.1 Upgrade (wrong version due to the bug)

The following screenshots were taken before I realised there was a bug which pushed the wrong NSX-T version for VCF 4.4.0

This was followed by the hosts upgrade.

The total time for my NSX-T upgrade of 4 hosts and 1 NSX Manager, all nested, was 1 hour 34 minutes.

NSX-T 3.1.3.5 (correct version for VCF 4.4)

In my case, with two clusters (a management cluster and an empty VI cluster), it took around 1 hour 40 minutes.

vCenter Server 7.0 Update 3c

https://docs.vmware.com/en/VMware-vSphere/7.0/rn/vsphere-vcenter-server-70u3c-release-notes.html

More precisely, I hit a couple of bugs:

vmware-perfcharts service failed to start after upgrade vCenter Server (85865)

I was going around in circles until I realised VMware Update Manager (VUM) was misbehaving: the vmware-updatemgr service kept crashing on the management vCenter. After a lot of troubleshooting, I was finally able to fix the problem by resetting the VUM database with the following commands:

service-control --stop vmware-updatemgr

/usr/lib/vmware-updatemgr/bin/updatemgr-utility.py reset-db

rm -rf /storage/updatemgr/patch-store/*

service-control --start vmware-updatemgr

ESXi Host 7.0 U3c

Finally, it’s ESXi upgrade time. This time I’m going to remediate two clusters in parallel.

Here you can see the progress

Unfortunately, in my nested lab something always breaks, meh 🙁

One of the hosts failed even though the upgrade actually completed.

I fixed this issue using KB 87748, “VCF 4.4 Manifest does not contain patch bundles causing skip upgrade to stop at 4.3.1 (87748)”: https://kb.vmware.com/s/article/87748

vRealize Suite Lifecycle Manager 8.6.2

Last but not least, we need to update vRSLCM.