DISCLAIMER: this post is based on VCF on VxRack SDDC. VCF DIY / ready-node deployments might be less prescriptive in terms of constraints or have different supported configurations.

When a workload domain (WLD) is provisioned in Cloud Foundation from SDDC Manager, a default VM storage policy is created and associated with the new cluster. The policy is based on the performance and availability (FTT) levels chosen in the creation wizard, which correspond to the following vSAN and vSphere settings:

| Performance | Stripe Width | Flash Reserve | Object space reservation |
| --- | --- | --- | --- |
| Low | 1 | 0% | 40% |
| Balanced | 1 | 0% | 70% |
| High | 4 | 0% | 100% |

| Availability | vSAN FTT | vSAN FD | vSphere HA | Max Size |
| --- | --- | --- | --- | --- |
| None | FTT=0, 3 hosts min | No | No | Cluster maximum |
| Normal | FTT=1, 4 hosts min | No | Enabled, % based admission control | Cluster maximum |
| High | FTT=2, 5 hosts min | No | Enabled, % based admission control | Max hosts available in one rack |
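
For quick reference, the two tables above can be expressed as a small lookup. This is only an illustrative sketch: the class, field and function names are my own and are not part of SDDC Manager or any VMware API; the values are copied from the tables.

```python
from typing import NamedTuple


class PerformanceSettings(NamedTuple):
    stripe_width: int
    flash_reserve_pct: int
    object_space_reservation_pct: int


class AvailabilitySettings(NamedTuple):
    ftt: int
    min_hosts: int
    fault_domains: bool
    ha_admission_control: bool  # True = HA enabled with % based admission control
    max_size: str


# Values taken from the Performance table.
PERFORMANCE = {
    "Low": PerformanceSettings(1, 0, 40),
    "Balanced": PerformanceSettings(1, 0, 70),
    "High": PerformanceSettings(4, 0, 100),
}

# Values taken from the Availability table.
AVAILABILITY = {
    "None": AvailabilitySettings(0, 3, False, False, "Cluster maximum"),
    "Normal": AvailabilitySettings(1, 4, False, True, "Cluster maximum"),
    "High": AvailabilitySettings(2, 5, False, True, "Max hosts available in one rack"),
}


def default_policy(performance: str, availability: str) -> dict:
    """Merge the settings implied by the two wizard choices into one dict."""
    perf = PERFORMANCE[performance]
    avail = AVAILABILITY[availability]
    return {**perf._asdict(), **avail._asdict()}
```

For example, `default_policy("Low", "None")` returns a dict with `ftt` equal to 0 and `object_space_reservation_pct` equal to 40.
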

During WLD creation a new vCenter Server, an NSX Manager and three NSX Controllers are spun up. The first two reside on the Management Cluster (aka MGMT WLD), while the controllers run on the new WLD itself.

Problem

I recently ran into a situation where a WLD was initially created with Performance=Low and Availability=Low (for testing), but later the customer realised the need to increase the FTT, for obvious reasons that don’t need explaining.

Long story short, you simply create a new VM Storage Policy and re-apply it to the existing workloads, easy-peasy.

The only exception is the NSX Controllers, which you can’t edit; that’s normal behaviour for service VMs. Trying to re-apply the storage policy to a controller would return the following error:

Two ways to resolve the problem are:

- Hack the VCDB database and remove the VM from the vpx_disabled_methods table, which I covered before in this article

- William Lam wrote a nice post on how to use the vCenter MOB to enable vim.VirtualMachine.reconfigure

However, neither of these two procedures is likely to be well accepted by customers, especially in production environments.

Worry not! There’s an even easier solution, which is the one I used: simply redeploy all the controllers, one at a time, making sure the cluster peering is re-established before moving on to the next one.

but…

Since it’s a VMware Cloud Foundation cluster, I wasn’t sure whether messing around with the controllers would break the back-end Cassandra database; in other words, whether or not SDDC Manager stores NSX Controller details for workload domains.
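Setting the SDDC Manager question aside for a moment, the one-at-a-time redeploy itself can be sketched as a simple loop. This is a minimal sketch under assumptions: `redeploy` and `cluster_healthy` are hypothetical callables you would have to implement yourself (in my case the steps were done by hand in the NSX Manager UI), and the polling interval and retry count are arbitrary.

```python
import time
from typing import Callable, Iterable, List


def rolling_redeploy(
    controllers: Iterable[str],
    redeploy: Callable[[str], None],       # hypothetical: delete + redeploy one controller
    cluster_healthy: Callable[[], bool],   # hypothetical: True when peering is re-established
    poll_seconds: float = 30.0,
    max_polls: int = 40,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Redeploy controllers one at a time, waiting for a healthy cluster in between."""
    done: List[str] = []
    for name in controllers:
        # Never touch a controller while the cluster is already degraded.
        if not cluster_healthy():
            raise RuntimeError(f"cluster not healthy before redeploying {name}")
        redeploy(name)
        # Wait for the cluster peering to come back before moving on.
        for _ in range(max_polls):
            if cluster_healthy():
                break
            sleep(poll_seconds)
        else:
            raise RuntimeError(f"cluster did not recover after redeploying {name}")
        done.append(name)
    return done
```

The important design choice is that the loop stops at the first controller that fails to rejoin, so at most one node is ever out of the cluster at a time.
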

Checking Cassandra for NSX Controller tables

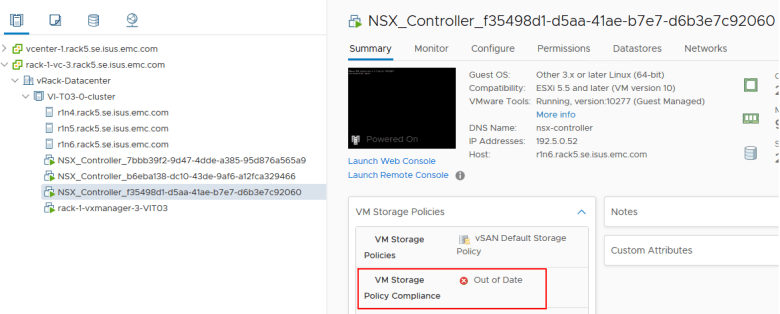

The controller VM whose display name ends with “92060” has the IP address 192.5.0.52

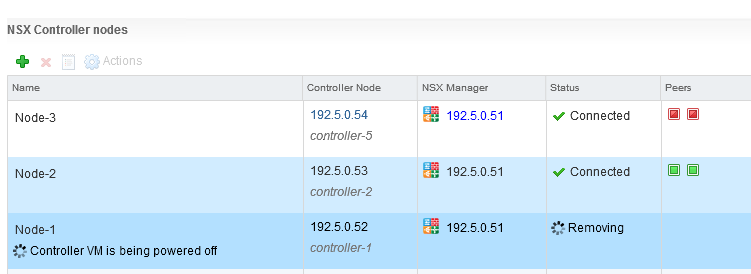

Which corresponds to controller-1 (display name Node-1) on NSX Manager 192.5.0.51

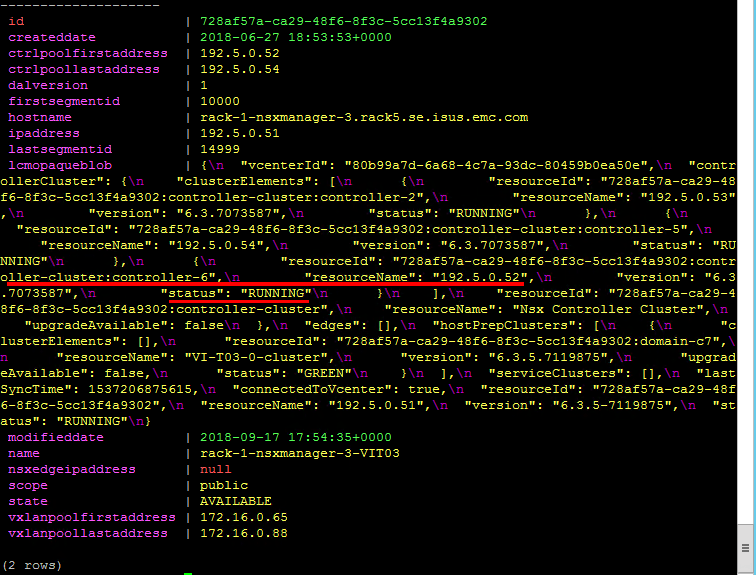

Let’s check what information Cassandra stores

select * from nsxcontroller;

The nsxcontroller table does not store controller details for any workload domain other than the MGMT WLD. Now let’s check the NSX Manager table:

select * from nsx;

Also notice the AVAILABLE state. Now I’m going to redeploy controller-1, and by that I mean a delete followed by a redeploy operation

and it’s gone

Checking Cassandra again, we can see controller-1 is gone

adding Node-1 back

node deploying

192.5.0.52 redeployed as ID controller-6

The controller storage policy is now compliant

and Cassandra database is happy again

** UPDATE 18/09/2018 **

After further investigation, clicking around the SDDC Manager GUI, I spotted the following

So basically Cassandra stores high-level details for the VM storage policies in the domain table

In my example the VI WLD had FTT=0 when it was deployed and I changed it to 1 at a later stage. According to the GUI this translates to the label NORMAL (instead of LOW)

PLEASE NOTE: editing the SDDC Manager database is not supported, so you must engage VMware Global Support Services (GSS) in order to fix it

However, for the sake of “IT science”, I will demonstrate that a very simple SQL update statement is all you need here.

update domain set redundancy='NORMAL' where id=253e6629-de26-410e-93bf-09132a862546;
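
If you wanted to avoid typos when preparing a statement like the one above (for example to hand over to GSS), a tiny helper could build it from the FTT value and the domain ID. This is a hedged sketch only: the function name is made up, the LOW and NORMAL labels come from what I saw in this post, and the HIGH label for FTT=2 is an assumption I have not verified.

```python
import uuid

# LOW and NORMAL were observed in the domain table; HIGH is an unverified assumption.
FTT_TO_REDUNDANCY = {0: "LOW", 1: "NORMAL", 2: "HIGH"}


def build_redundancy_update(domain_id: str, ftt: int) -> str:
    """Return the update statement for the domain table as a string (nothing is executed)."""
    if ftt not in FTT_TO_REDUNDANCY:
        raise ValueError(f"unsupported FTT value: {ftt}")
    # uuid.UUID raises ValueError on a malformed ID, so a typo never reaches the database.
    parsed = uuid.UUID(domain_id)
    return f"update domain set redundancy='{FTT_TO_REDUNDANCY[ftt]}' where id={parsed};"


print(build_redundancy_update("253e6629-de26-410e-93bf-09132a862546", 1))
```

The helper only formats text; running the statement against the SDDC Manager database remains unsupported without GSS.
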